Counting unique visitors without cookies

In a disk-space and privacy-friendly way

Summary:

The common problem of counting unique visitors to a page is often solved by

storing cookies in the visiting browser. Storing all information about visitors

server-side is possible, but takes a lot of disk space and computation. Bloom

filters present an alternative that should be fast enough even for sites behind

high-performance caching systems to implement.

Update 2011-10-30: There is a better method.

Please read Estimating unique visitors in larger

numbers for a description.

Update 2018-03-17: This article hasn't aged well; better information about bloom filters is easy to find, and browser fingerprinting is such a concern now that it's the subject of academic research and a reason to use specialized browser versions to defeat it.

Bloom filters

Bloom filters are data structures that store a set of elements without storing all the elements themselves. They do this by discarding a lot of information about the individual elements, and thereby introducing uncertainty into the equation. Maybe a particular element is a member of the set. There's no way to be certain. But we can be certain when an element is not a member of the set.

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 |

In the bloom filter pictured, we're extracting information from the elements (which happen to be numbers in the range 0 to 99) in two steps. First we use the first number as an index into the array, then the second number. In both steps, we set the value at that position to ‘one’, if it isn't ‘one’ already.

In this case, we don't know if the value in the set is 47 or 74. Or both. But we know for certain that 11 is not in the set. And neither is 24. If they were, the bits at positions one and two would be ‘one’ as well.

If we'd taken just one digit to use as an index, the chance of any two random numbers in the range 0 to 99 'colliding' would be about one in ten. Since we use two, the chances are one in a hundred. If we'd used three-digit numbers, the chances are closer to one in thousand.

Of course, we can't store all values from 0 to 999, or even 0 to 50 in just ten bits. After just a few values, the bit array would fill up completely, and suddenly the set would store all values. The exact limits for bloom filters, as well as many other things, are documented in the wikipedia article about them.

What to feed the filters?

In the example above, we've used a ten-element bit array, so we could use a number from one to ten as an index for it. But the input needn't be numbers. If we choose the bit array length so that it's a power of two, we can use anything, as long as

- It can be expressed as a sequence of bits

- We can expect those bits to be evenly distributed across the bit array

The second criterium is rather important, as much of the assumptions about a bloom filter's accuracy depend on it. However, it's also very hard to accomplish. Ultimately, we just don't know exactly what the distribution of inputs is going to be like beforehand.

Once we can identify whether we've seen something before, counting the unique number of anything becomes a snap. Seen it before? Don't count it (or maybe only count the tiny probability that our filter is wrong). If it's new, we count it.

IP addresses

IP addresses contain 32 or more bits. They are distributed roughly according to geography, though.

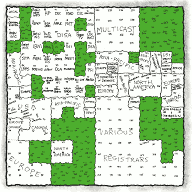

Randall Munroe's map of the internet, pictured above, explains

this visually in a better way than I ever could. Since the visitors to your site will often be

from a certain geographical (or even professional) area, there's a chance that a few top-level

allocations will be overrepresented, while others won't be seen at all.

But if we disregard the top eight bits, we're left with twenty-four, or two × twelve bits;

Two indices for a 4096-bit bloom filter. Enough to count up to a thousand visitors with reasonable

accuracy.

IPv6? That's probably a bit more difficult. And perhaps a subject for a follow-up.

‘Leaked’ browser information

The American Electronic Frontier Foundation has recently started the rather nifty Panopticlick project. Your browser (and nearly everyone's browser) conveys minute details about its version, its operating system's version and its language settings with every HTTP request. The EFF argues that this is a privacy risk; that this information uniquely identifies your computer to anyone whose site you visit. And they're right.

They've also expressed the information value of these details as bits, which gave me the idea to write this article in the first place.

Assuming we can efficiently extract the entropy from the User-Agent values and others, we have almost ideal indices for a bloom filter; tied to a particular browser and computer, rather than to an IP address, just like cookies. And we don't actually have to store the specifics of someone's visit. We can just compress that information into a few kilobytes (at worst) of bits.

In summary

The method described here has been implemented, and works reasonably well in practice. It has obvious limitations, the most restrictive one being the one imposed by the available entropy (uniquely identifying bits), which imposes limits on the size of the filter and, ultimately, on the number of unique visitors you can count. Using Stable bloom filters (Deng, Rafiei) is an obvious workaround for this problem, gently discarding information about visitors in the past.

Is it a serious alternative to just setting a cookie? Obviously not. But it is a fairly easily-implemented, computationally inexpensive alternative for when cookies can be trusted, and it should demonstrate (as if the warning given by the EFF wasn't enough) That disabling cookies in a browser isn't enough to ensure privacy.